Experimenting with Stable Diffusion on my own machine

By Eric

AI-generated artwork has been in the news a lot lately, from the person who won an art contest with a computer-generated image to Microsoft's recent announcement that it would include AI image generation in its Bing search engine. I've fiddled a little with online AI image generation services, but I wanted to try it out on my own machine, where I wouldn't be limited by a service's free tier.

Being a programmer, I thought I'd go to the source and try to run the code from the original Stable Diffusion GitHub repository. The instructions in the README weren't super clear, but I was able to get it running and try the suggested image prompt:

```shell
python scripts/txt2img.py --prompt "a photograph of an astronaut riding a horse" --plms
```

The results were not very satisfactory:

I discovered that someone had created a different repository that was much easier to get running. It has a web-based UI that surfaces the various parameters controlling image generation, most of which I don't yet understand. As I play around more (perhaps picking a constant seed for randomization), I'll hopefully figure more of them out. But at least I was in business:

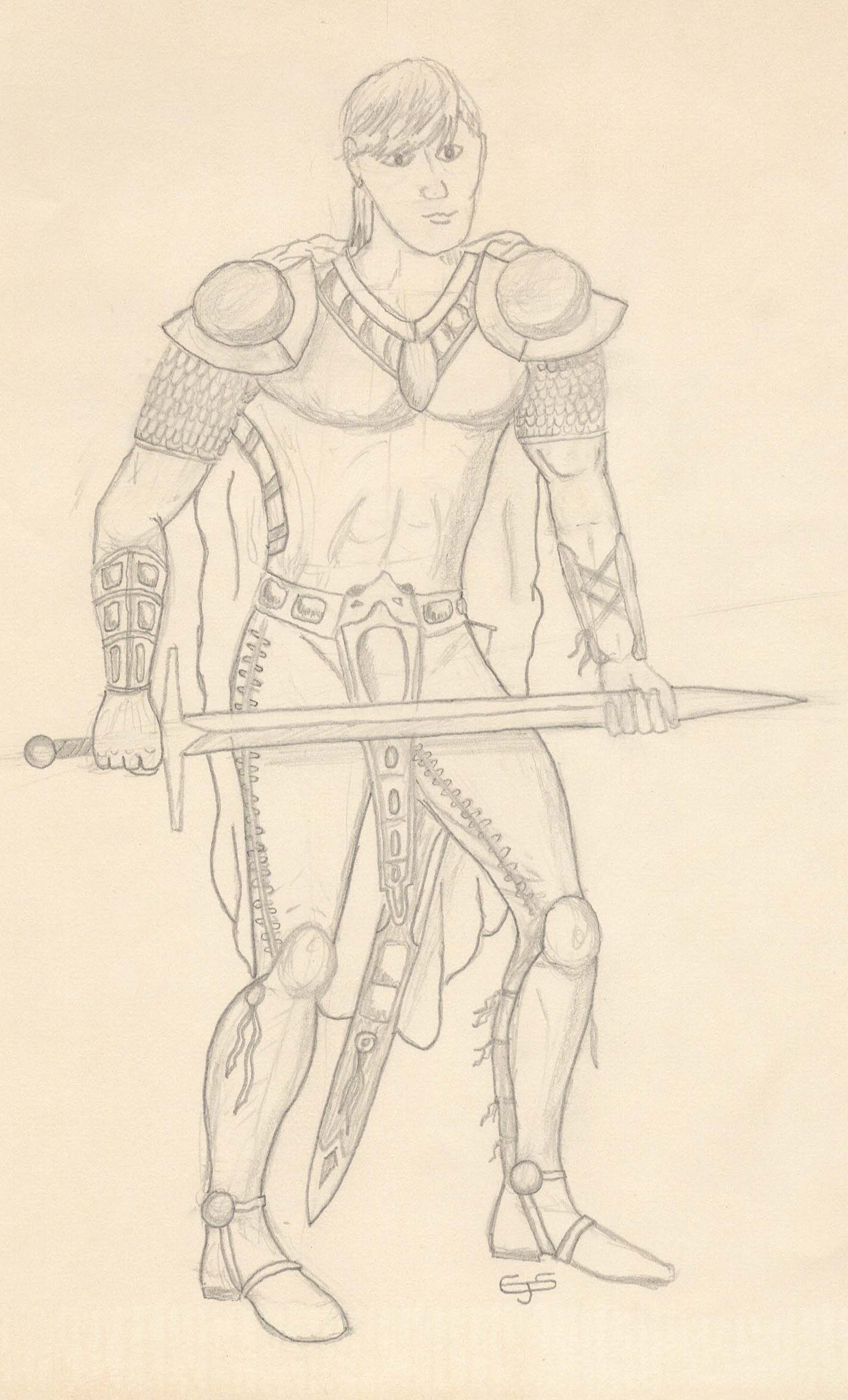
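Why would a constant seed help? As I understand it, the seed determines the random noise that generation starts from, so holding it fixed while changing one other parameter should make that parameter's effect easier to see. Here is a toy sketch of that idea using Python's `random` module; `initial_noise` is a hypothetical helper, and the real tools draw a latent tensor with a library RNG (such as PyTorch's) rather than a Python list.

```python
import random


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Toy stand-in for the random latent a sampler starts from.

    Hypothetical helper: real tools seed a library RNG (e.g. PyTorch) to
    build a latent tensor, but the idea is the same: the seed fixes the noise.
    """
    rng = random.Random(seed)  # private generator, unaffected by global random state
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


same_a = initial_noise(1234)
same_b = initial_noise(1234)
other = initial_noise(1235)

# Same seed: identical starting noise, so in a real tool any change in the
# output would come from the parameter you changed, not from the randomness.
print(same_a == same_b)
# Different seed: different starting noise, and therefore a different image.
print(same_a == other)
```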

One feature I was especially interested in was the ability to generate images from an existing image, so I scanned a drawing I'd done many years ago as my source:

For the text prompt, I basically tried to describe the image, like "leather armor, sword, fantasy warrior".

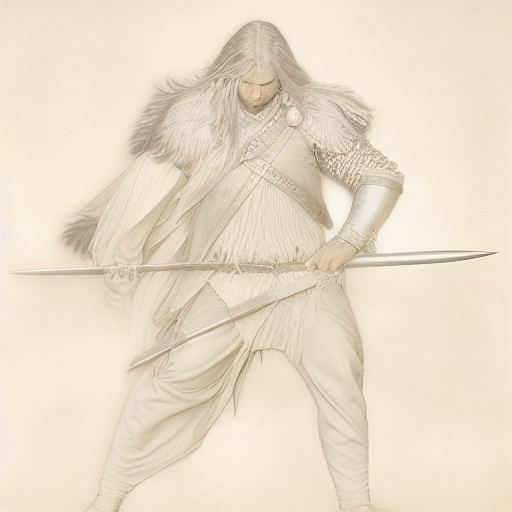

"Denoising strength" is the parameter that controls how much of the original image is used. At around 0.33, I get nearly a copy of the original image (though a bit squashed since the generated images are always 256x256):

Setting the strength above 0.5 gives more "creative" results that are still (mostly) recognizable as something like my original drawing. The color is especially sticky: it carries over even when I tried prompting with "full-color".
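One way to see why low strength stays so close to the source: in the img2img code I've looked at (the original repo's `scripts/img2img.py` and the Hugging Face `diffusers` img2img pipeline), the source image is only noised part of the way through the schedule, and just `int(strength * steps)` denoising steps are run on it. The web UI I used may differ in details. The sketch below assumes that rule; `img2img_steps` is a hypothetical name, not a function from either project.

```python
def img2img_steps(strength: float, total_steps: int) -> tuple[int, int]:
    """Split a sampling run into (steps skipped, steps actually denoised).

    Assumes the int(strength * steps) rule used by the original repo's
    img2img script and by diffusers; other tools may round differently.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("denoising strength must be between 0.0 and 1.0")
    # The source is noised only up to this point in the schedule,
    # so the model runs just these final denoising steps on it.
    steps_run = min(int(strength * total_steps), total_steps)
    return total_steps - steps_run, steps_run


if __name__ == "__main__":
    for strength in (0.33, 0.5, 0.75, 1.0):
        skipped, run = img2img_steps(strength, 50)
        print(f"strength {strength:.2f}: denoise {run} of 50 steps (skip {skipped})")
```

Under this assumption, a strength of 0.33 with 50 steps leaves the model only 16 steps to reshape a lightly noised copy of the drawing, which fits the near-copy I got, while 1.0 starts from pure noise and relies on the prompt alone.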

So far I think skilled artists are safe from being replaced by AI (certainly as wielded by me). Even when the AI produces something sort of like what you want, it's not as easy to edit a generated image as it is to edit the text that a tool like GitHub Copilot generates.